Caching is a highly prevalent and important concept in computer science. Time and time again I have seen it come up in different modules I have taken. This article serves as a recap to all of them.

CS2100: Caching between CPU and main memory

In CS2100 we are introduced to the concept of caching with respect to the CPU and the memory. As the analogy goes, suppose you are the CPU while the books on the library shelves are the memory devices, then caching something would mean to have the books temporarily placed on the table instead of you having to constantly go to the shelves. This essentially reduces time taken for retrieving information.

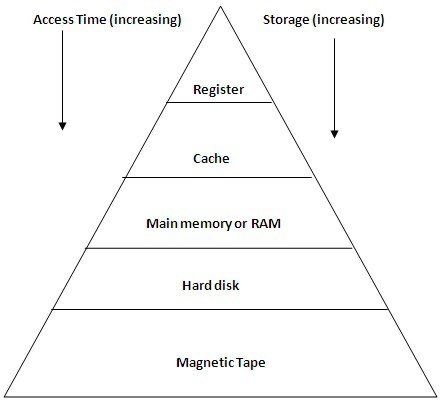

You may have heard the terms L1, L2 and L3 caches which are exactly the type of caches the module was talking about. Sitting between the register and the main memory, they are crucial for faster access time, reduced memory latency and better data locality. This brings us to the concept of memory hierarchy which is a fundamental way to organize memory in computers in order to achieve a balance between access speed, capacity and cost.

We also went into how these caches can be implemented differently by studying two major types of caches: direct mapped cache and set associative cache. We then moved on to learning the tradeoffs of different replacement policies and when they may be suitable. There is no silver bullet for every scenario just like everything else in computer science.

CS2106 Operating Systems: Caching inside the CPU - Translation Lookaside Table

Towards the end of the OS module we started learning about memory paging. Think about how a computer process’s memory should be allocated on the main memory device. The most intuitive approach would be to put all the memory of a process together, in a contiguous chunk. However this presents a lot of problems such as available memory forming “holes” and difficulty in managing and allocating memory to new processes.

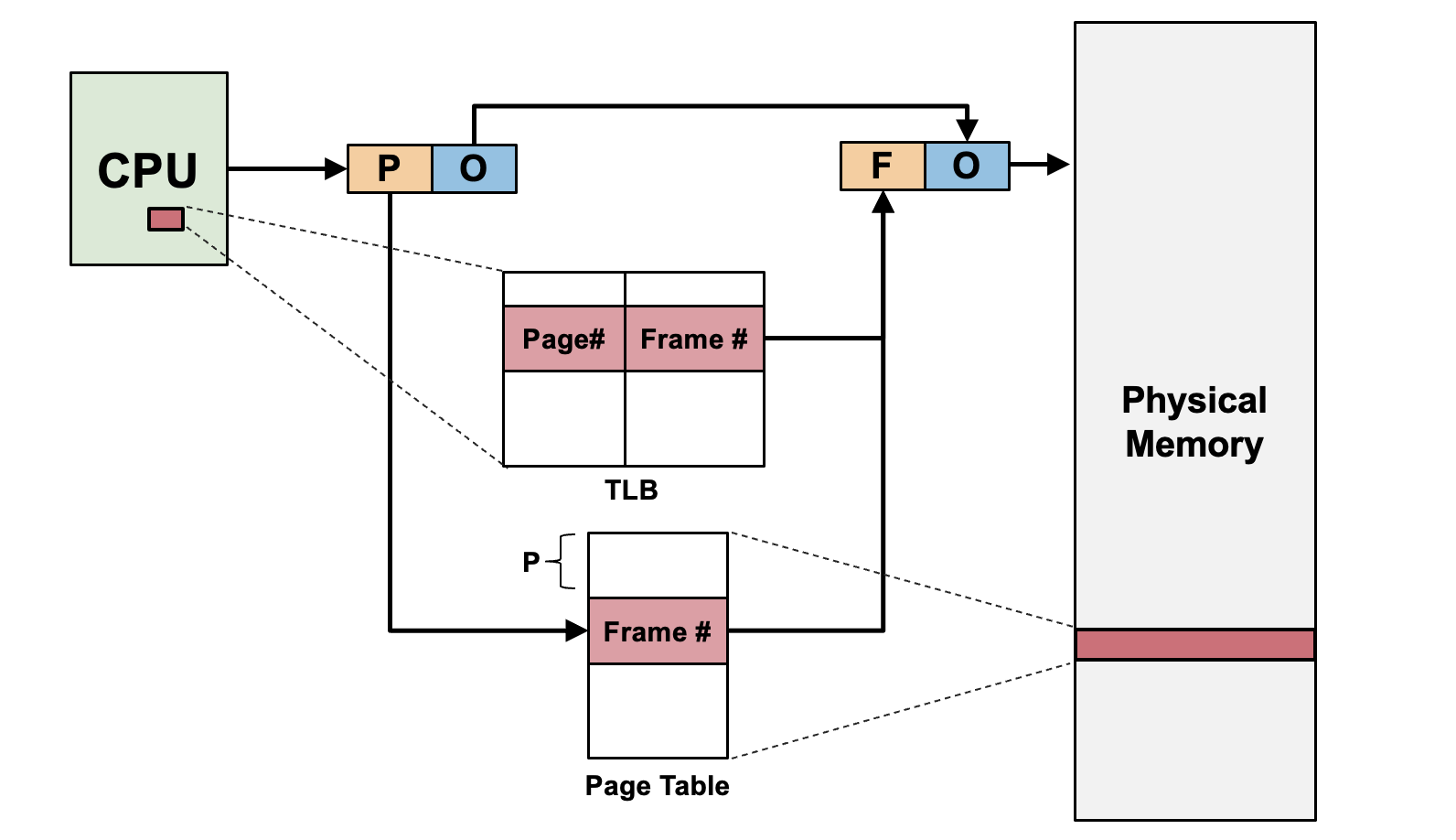

To solve this, paging scheme is introduced which allows processes to have its logical memory disjointly allocated on the physical memory device. In order to do this, each process needs a map to map logical memory address to physical memory address and this map is called the paging table. There is just one big problem with this: each memory access now requires two physical memory accesses because the paging table is itself stored in memory!

This is where the Translation Lookaside Tables (TLB) comes in to save the day. It is a specialized cache that is built into modern CPUs to support paging. It is small in size but extremely fast and very effective in improving memory access time due to the principle of temporal locality. When the CPU tries to fetch a page of data, it will first check the TLB before checking the page table as seen in the diagram below.

CS2105 Computer Networks

In computer networks we touched on the the concept of caching frequently at various level of the network stack!

Proxy server web cache

Firstly, when we were learning about application layer protocols and were introduced to the client-server model and caching on both client and servers. Proxy servers is an example of caching on the web. This are servers that sit between the client and the origin server often in a geographically closer location and return the cached response to the client if the content of the cached response is not stale. It reduces the overall latency of the responses.

Server-side caching

Server side caching, famously the use of redis, while not introduced in the module itself is yet another example of caching. One of the main idea behind the use of redis is to reduce database queries which can be orders of magnitude slower than fetching data from a cache storage like redis.

Client-side caching

Client-side caches are an important concept as well. These are caches that web browsers like Google Chrome stores in order to reduce unnecessary network requests, customize our user experience and also customize the server’s response to our requests. Famously, cookies and localStorage are the most commonly used caches in the browser.

DNS caching

DNS caching is yet another example of caching. DNS caching is done on every computer and it is a way for the device to remember DNS records locally without having to do DNS lookup for recently visited websites. This can greatly improve the overall response time as iterated DNS lookup which involves multiple network requests to multiple servers can be quite slow.

ARP table

Finally, Address Resolution Protocol (ARP) cache was also introduced to us towards the end of the module as we navigate through the link layer of the network stack. ARP cache or ARP table is a small table that contains the mappings of IP address to MAC address of other network devices in the same subnet. I will not go into the details of what are subnets and MAC address but the key idea is that computer devices use IP address to locate and message each other on the internet but the internet is itself made up of individual links. Transfer of messages between links requires the sender to know the receiver’s MAC address. A device would need to remember which MAC address to send a message to for a given IP address and this is where ARP table comes in. If there is no ARP table, the device would need to do an ARP broadcast for every network request targeted at an IP address which is highly inefficient.